This story begins from me wanting to be a good little sysadmin and make sure all our services are properly monitored.

Netdata is a rather handy tool for monitoring servers. It can draw pretty graphs of all kinds of metrics from the various services running on the machine and from the operating system itself. It can also be configured to send out alerts when one of the metrics has reached a certain threshold (eg. a disk is getting full, a service is suddenly using a lot of CPU, a web server is returning a suspiciously high number of 5xx responses, or a service is completely unresponsive).

A web server cluster I am responsible for was already being monitored with Netdata. It was tracking the database services, the nginx web servers and the haproxy loadbalancers, along with the usual plethora of operating system metrics. But I had noticed that one thing not being tracked was the PHP-FPM service, responsible for serving the PHP scripts used on the server. It’s good to get statistics for this service as it can be very helpful for performance tuning, and of course it’s also very useful to know if the service has died.

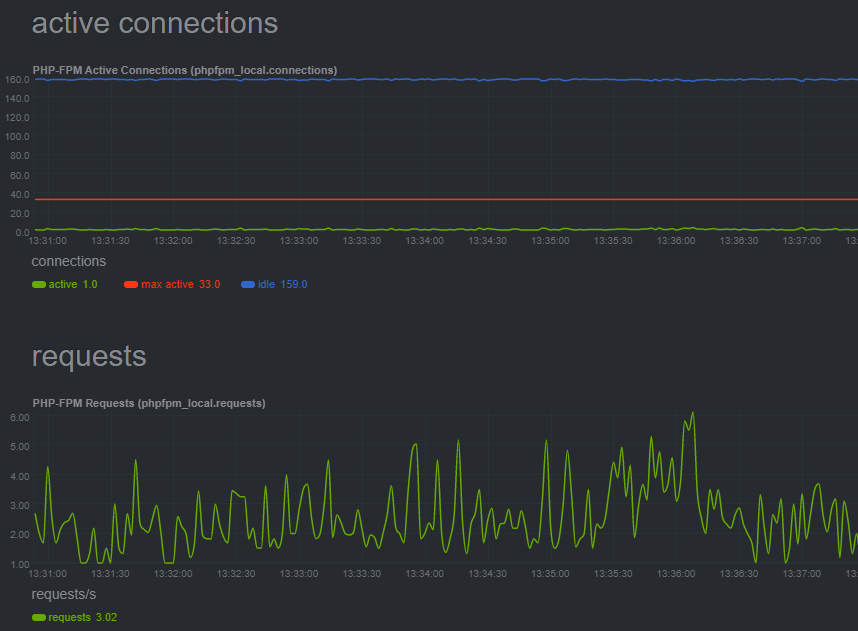

Netdata does have a PHP-FPM module, so all I needed to do was configure all parts correctly so that PHP-FPM was serving the relevant status page, nginx was forwarding the requests and allowing access to it, and Netdata had the correct address to get the statistics from. Not a problem at all; I set up the config, checked that we were now getting the pretty graphs and was satisfied with a job well done.

Of course the story doesn’t end there. That would be a very boring story. Not that this story is particularly exciting mind you, but you’ve read this far at least…

The noise

Not long after setting up the check, we started seeing alerts in our monitoring channel, letting us know that the PHP-FPM service was down. A minute or two later the service recovered and we got the alert telling us everything was fine again. This would happen typically several times per day, at seemingly random times. Sometimes it would affect all servers in the cluster at around the same time, other times it would just affect one of them.

It was odd really; although the services were being reported as down, we didn’t see any degradation in the service. It was apparently a phantom alert, serving no purpose other than to annoy us and potentially hide more important alerts within the noise, though perhaps there was a hidden underlying problem that they were triggering on?

Who to blame?

At first I wondered if the very frequent checks themselves were the problem. Maybe the service was being overwhelmed with requests. But that’s where the graphs come in. They show exactly how close the service is to reaching its limits, and they very clearly showed that it was not even close to full capacity.

Maybe Netdata was configured wrong and being too sensitive? Either not giving the service enough time to respond or alerting after only one failed check, or a combination of both of those things. But… this didn’t seem to be the case either. Certainly we could rule out the latter as we could see the gaps in the graph corresponding with the times the alerts were sent out, so it was definitely not able to record metrics during those times.

To test if the service was responding slowly (or not at all), I wrote a simple shell script to fetch from the same endpoint that Netdata was using and record the time taken for each request. I set it running and it showed that everything was apparently fine. I left it running until the next alert came along, and sure enough, my script noticed nothing wrong at all during this time.

I modified my script to save a copy of the response returned by the PHP-FPM service, just to see if it was returning an empty response, an error, or something else obviously wrong. Obviously this generated a lot of files, so I only ran it for a little while, but it seemed like the files it was getting were okay. Surely it had to be something wrong with Netdata itself?

One red herring

I checked the web server logs to see if anything was amiss. Nothing had showed up in the error logs, but looking into the access logs I could see the checks coming in from Netdata. One curious thing was that during the times that Netdata reported the service as down, the checks came in less frequently. Here’s a log excerpt:

{"ip": "127.0.0.1","host": "localhost","path": "/status?full&json","status": "200","referrer": "-","user_agent": "-","length": 50405,"generation_time_milli": 0.003,"date": "2020-12-14T12:55:41+00:00"}

{"ip": "127.0.0.1","host": "localhost","path": "/status?full&json","status": "200","referrer": "-","user_agent": "-","length": 50430,"generation_time_milli": 0.003,"date": "2020-12-14T12:55:42+00:00"}

{"ip": "127.0.0.1","host": "localhost","path": "/status?full&json","status": "200","referrer": "-","user_agent": "-","length": 50381,"generation_time_milli": 0.003,"date": "2020-12-14T12:55:43+00:00"}

{"ip": "127.0.0.1","host": "localhost","path": "/status?full&json","status": "200","referrer": "-","user_agent": "-","length": 50374,"generation_time_milli": 0.004,"date": "2020-12-14T12:55:46+00:00"}

{"ip": "127.0.0.1","host": "localhost","path": "/status?full&json","status": "200","referrer": "-","user_agent": "-","length": 50185,"generation_time_milli": 0.003,"date": "2020-12-14T12:55:49+00:00"}

{"ip": "127.0.0.1","host": "localhost","path": "/status?full&json","status": "200","referrer": "-","user_agent": "-","length": 50374,"generation_time_milli": 0.007,"date": "2020-12-14T12:55:52+00:00"}

{"ip": "127.0.0.1","host": "localhost","path": "/status?full&json","status": "200","referrer": "-","user_agent": "-","length": 50797,"generation_time_milli": 0.002,"date": "2020-12-14T12:55:55+00:00"}

{"ip": "127.0.0.1","host": "localhost","path": "/status?full&json","status": "200","referrer": "-","user_agent": "-","length": 51184,"generation_time_milli": 0.003,"date": "2020-12-14T12:55:58+00:00"}

{"ip": "127.0.0.1","host": "localhost","path": "/status?full&json","status": "200","referrer": "-","user_agent": "-","length": 51869,"generation_time_milli": 0.002,"date": "2020-12-14T12:56:04+00:00"}

{"ip": "127.0.0.1","host": "localhost","path": "/status?full&json","status": "200","referrer": "-","user_agent": "-","length": 51698,"generation_time_milli": 0.004,"date": "2020-12-14T12:56:10+00:00"}

{"ip": "127.0.0.1","host": "localhost","path": "/status?full&json","status": "200","referrer": "-","user_agent": "-","length": 52097,"generation_time_milli": 0.003,"date": "2020-12-14T12:56:16+00:00"}

{"ip": "127.0.0.1","host": "localhost","path": "/status?full&json","status": "200","referrer": "-","user_agent": "-","length": 52464,"generation_time_milli": 0.004,"date": "2020-12-14T12:56:22+00:00"}

{"ip": "127.0.0.1","host": "localhost","path": "/status?full&json","status": "200","referrer": "-","user_agent": "-","length": 52498,"generation_time_milli": 0.004,"date": "2020-12-14T12:56:28+00:00"}

{"ip": "127.0.0.1","host": "localhost","path": "/status?full&json","status": "200","referrer": "-","user_agent": "-","length": 52897,"generation_time_milli": 0.010,"date": "2020-12-14T12:56:37+00:00"}

{"ip": "127.0.0.1","host": "localhost","path": "/status?full&json","status": "200","referrer": "-","user_agent": "-","length": 52866,"generation_time_milli": 0.003,"date": "2020-12-14T12:56:38+00:00"}

{"ip": "127.0.0.1","host": "localhost","path": "/status?full&json","status": "200","referrer": "-","user_agent": "-","length": 52841,"generation_time_milli": 0.024,"date": "2020-12-14T12:56:39+00:00"}

Taking a look at those timestamps:

12:55:41 12:55:42 (+1 second) 12:55:43 (+1 second) 12:55:46 (+3 seconds) 12:55:49 (+3 seconds) 12:55:52 (+3 seconds) 12:55:55 (+3 seconds) 12:55:58 (+3 seconds) 12:56:04 (+6 seconds) 12:56:10 (+6 seconds) 12:56:16 (+6 seconds) 12:56:22 (+6 seconds) 12:56:28 (+6 seconds) 12:56:37 (+9 seconds) 12:56:38 (+1 second) 12:56:39 (+1 second)

Well that was a little odd. The timing of 1 → 3 → 6 → 9 seconds increasing every 5 attempts seems to be a very deliberate-looking pattern. The alert happened around the same time, could this be a sign of what’s going wrong?

Well no, and I had a suspicion already that I might merely be looking at a symptom of the problem here, and it turns out that suspicion was right. Netdata does deliberately back off when it doesn’t get a response from the server (so as to not inundate the server with requests), and that was what we were seeing here with the increasing delays between requests. But the odd thing was that during this time, all of the responses from the server were "status": "200", which is HTTP parlance for “everything is OK!”. Why was Netdata thinking the service was down if the response was OK?

To the source

To try and eliminate Netdata from the list of suspects I had a look at the Python source for the PHP-FPM check in Netdata. Nothing seemed out of the ordinary though: it was fetching from the URL, parsing the response and getting the relevant data. I couldn’t see anything obviously wrong with it.

The end?

One last thing I did that day was to configure some additional checks for nginx in Netdata to see if it would also show errors at the same time as the PHP-FPM alerts happened. Since the alerts were infrequent and unpredictable I had to wait and see. Sure enough, when the next alert came in, it was still only PHP-FPM having issues – Netdata was still getting good results in from the nginx service.

Eventually I decided to give up, and leave it as something to look at later. It had defeated me that day, but I would return one day and I would figure it out, of that I was sure.

Continued in part two.