Looking back, it seems so obvious now. Why hadn’t I done the one simple test that would have answered this problem ages ago?

Continued from part one.

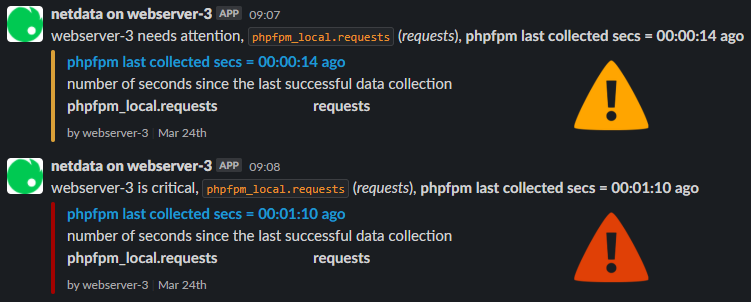

The check was noisy, but it was producing useful graphs, and though I could have disabled the alerts, they were a handy reminder that “you should really look into fixing that when you get the chance”. And so they stayed…

For 3 months.

Although I had taken a cursory glance at the problem a couple of times during those 3 months, I hadn’t made further any progress at all. Eventually though the incessant alerts got to me, and I decided to take another proper look at the situation.

The first thing I did (which for some reason I hadn’t done before) was to take a look at the logs for Netdata itself when the alerts were triggered. This was one such log excerpt:

2021-03-23 07:51:02: alarm-notify.sh: INFO: sent slack notification for: webserver-3 phpfpm_local.requests.phpfpm_last_collected_secs is WARNING to '#alerts' 2021-03-23 07:51:52: alarm-notify.sh: INFO: sent slack notification for: webserver-3 phpfpm_local.requests.phpfpm_last_collected_secs is CRITICAL to '#alerts' 2021-03-23 07:52:21: netdata INFO : PLUGINSD[python.d] : RRD database for chart 'phpfpm_local.connections' on host 'webserver-3' is 95.00216 secs in the past (counter #3619122, update #3578054). Adjusting it to current time. 2021-03-23 07:52:21: netdata INFO : PLUGINSD[python.d] : RRD database for chart 'phpfpm_local.requests' on host 'webserver-3' is 95.00376 secs in the past (counter #3619122, update #3578054). Adjusting it to current time. 2021-03-23 07:52:21: netdata INFO : PLUGINSD[python.d] : RRD database for chart 'phpfpm_local.performance' on host 'webserver-3' is 95.00378 secs in the past (counter #3619122, update #3578054). Adjusting it to current time. 2021-03-23 07:52:21: netdata INFO : PLUGINSD[python.d] : RRD database for chart 'phpfpm_local.request_duration' on host 'webserver-3' is 95.00374 secs in the past (counter #3619122, update #3578054). Adjusting it to current time. 2021-03-23 07:52:21: netdata INFO : PLUGINSD[python.d] : RRD database for chart 'phpfpm_local.request_cpu' on host 'webserver-3' is 95.00366 secs in the past (counter #3619122, update #3578054). Adjusting it to current time. 2021-03-23 07:52:21: netdata INFO : PLUGINSD[python.d] : RRD database for chart 'phpfpm_local.request_mem' on host 'webserver-3' is 95.00363 secs in the past (counter #3619122, update #3578054). Adjusting it to current time. 2021-03-23 07:52:21: netdata INFO : PLUGINSD[python.d] : RRD database for chart 'netdata.runtime_phpfpm_local' on host 'webserver-3' is 95.00360 secs in the past (counter #3619122, update #3578054). Adjusting it to current time. 2021-03-23 07:57:32: alarm-notify.sh: INFO: sent slack notification for: webserver-3 phpfpm_local.requests.phpfpm_last_collected_secs is CLEAR to '#alerts'

This one unfortunately didn’t tell me much. It shows it sending alerts for the service being down, then after a short while noticing that the PHP-FPM graphs are now out of sync (probably at the point of recovery of the service), and then 5 minutes later reporting that everything is okay again.

No real new information there, but then I found another occurrence that was a lot more promising:

2021-03-23 11:06:28: python.d ERROR: phpfpm[localhost] : update() unhandled exception: 'processes' 2021-03-23 11:06:29: python.d ERROR: phpfpm[localhost] : update() unhandled exception: 'processes' 2021-03-23 11:06:30: python.d ERROR: phpfpm[localhost] : update() unhandled exception: 'processes' 2021-03-23 11:06:31: python.d ERROR: phpfpm[localhost] : update() unhandled exception: 'processes' 2021-03-23 11:06:32: python.d ERROR: phpfpm[localhost] : update() unhandled exception: 'processes' 2021-03-23 11:06:35: python.d ERROR: phpfpm[localhost] : update() unhandled exception: 'processes' 2021-03-23 11:06:38: python.d ERROR: phpfpm[localhost] : update() unhandled exception: 'processes' 2021-03-23 11:06:41: python.d ERROR: phpfpm[localhost] : update() unhandled exception: 'processes' 2021-03-23 11:06:43: alarm-notify.sh: INFO: sent slack notification for: webserver-3 phpfpm_local.requests.phpfpm_last_collected_secs is WARNING to '#alerts' 2021-03-23 11:06:44: python.d ERROR: phpfpm[localhost] : update() unhandled exception: 'processes' 2021-03-23 11:06:47: python.d ERROR: phpfpm[localhost] : update() unhandled exception: 'processes' 2021-03-23 11:06:53: python.d ERROR: phpfpm[localhost] : update() unhandled exception: 'processes' 2021-03-23 11:06:59: python.d ERROR: phpfpm[localhost] : update() unhandled exception: 'processes' 2021-03-23 11:07:05: python.d ERROR: phpfpm[localhost] : update() unhandled exception: 'processes' 2021-03-23 11:07:11: python.d ERROR: phpfpm[localhost] : update() unhandled exception: 'processes' 2021-03-23 11:07:17: python.d ERROR: phpfpm[localhost] : update() unhandled exception: 'processes' 2021-03-23 11:07:26: python.d ERROR: phpfpm[localhost] : update() unhandled exception: 'processes' 2021-03-23 11:07:32: alarm-notify.sh: INFO: sent slack notification for: webserver-3 phpfpm_local.requests.phpfpm_last_collected_secs is CRITICAL to '#alerts' 2021-03-23 11:07:35: python.d ERROR: phpfpm[localhost] : update() unhandled exception: 'processes' 2021-03-23 11:07:44: python.d ERROR: phpfpm[localhost] : update() unhandled exception: 'processes' 2021-03-23 11:07:53: python.d ERROR: phpfpm[localhost] : update() unhandled exception: 'processes' 2021-03-23 11:08:02: python.d ERROR: phpfpm[localhost] : update() unhandled exception: 'processes' 2021-03-23 11:08:13: netdata INFO : PLUGINSD[python.d] : RRD database for chart 'phpfpm_local.connections' on host 'webserver-3' is 105.99837 secs in the past (counter #103, update #104). Adjusting it to current time. 2021-03-23 11:08:13: netdata INFO : PLUGINSD[python.d] : RRD database for chart 'phpfpm_local.requests' on host 'webserver-3' is 105.99843 secs in the past (counter #103, update #104). Adjusting it to current time. 2021-03-23 11:08:13: netdata INFO : PLUGINSD[python.d] : RRD database for chart 'phpfpm_local.performance' on host 'webserver-3' is 105.99845 secs in the past (counter #103, update #104). Adjusting it to current time. 2021-03-23 11:08:13: netdata INFO : PLUGINSD[python.d] : RRD database for chart 'phpfpm_local.request_duration' on host 'webserver-3' is 105.99847 secs in the past (counter #103, update #104). Adjusting it to current time. 2021-03-23 11:08:13: netdata INFO : PLUGINSD[python.d] : RRD database for chart 'phpfpm_local.request_cpu' on host 'webserver-3' is 105.99864 secs in the past (counter #103, update #104). Adjusting it to current time. 2021-03-23 11:08:13: netdata INFO : PLUGINSD[python.d] : RRD database for chart 'phpfpm_local.request_mem' on host 'webserver-3' is 105.99865 secs in the past (counter #103, update #104). Adjusting it to current time. 2021-03-23 11:08:13: netdata INFO : PLUGINSD[python.d] : RRD database for chart 'netdata.runtime_phpfpm_local' on host 'webserver-3' is 105.99864 secs in the past (counter #103, update #104). Adjusting it to current time. 2021-03-23 11:13:23: alarm-notify.sh: INFO: sent slack notification for: webserver-3 phpfpm_local.requests.phpfpm_last_collected_secs is CLEAR to '#alerts'

Same alerts and chart update messages, but this python.d ERROR: phpfpm[localhost] : update() unhandled exception: 'processes' message that it was repeatedly reporting looked suspiciously like it might point to the actual cause of the problem!

Unfortunately there is no filename or line number with the error message, but I looked again at the source of Netdata’s PHP-FPM check, this time searching for any mention of the string “processes“.

The only thing I could find that I felt might be connected was this:

for process in [p for p in raw_json['processes'] if p['state'] == 'Idle']:

p_info.update(fetch_data_(raw_data=process, metrics_list=PER_PROCESS_INFO, pid=str(process['pid'])))If it was indeed this point at which the check was failing then it meant that Netdata had received the status report from PHP-FPM okay, but that it was having trouble parsing some data from it (and continued to have trouble parsing it for minutes at a time).

I was now determined to find out what output the PHP-FPM endpoint could possibly be giving to cause such a problem, so I waited for the next alert from the cluster, ready to catch the output myself. Fortunately I didn’t have to wait long, so as soon as the next alert came in, I ran the following command:

wget -q -O - 'http://localhost/status?full&json'What I got back from that command was about the same as what I’d always seen – a large block of text filling up the terminal window that looked like typical JSON. Staring at so much text trying to find a problem wasn’t going to be easy. To really see the JSON properly I decided to pretty-print it to make it more human-readable, so I ran:

wget -q -O - 'http://localhost/status?full&json' | json_ppI was expecting to see a nicely indented tree structure of JSON so that I could pinpoint the missing or problematic data, but instead the result I got back was just one line:

invalid character encountered while parsing JSON string, at character offset 36949 (before "\njhjfdgh@sybbr>r.ec...") at /usr/bin/json_pp line 51.

The JSON was invalid! Why hadn’t I thought to check that before?

Real progress

Armed with this new information, I took a closer look at the error. Is that a \n newline character I see there? I looked at the original output again. Sure enough, I hadn’t noticed it in the wall of text before, but there was quite clearly an actual line break (not an encoded one) in the middle of a JSON string.

Yeah, that would do it, that’s definitely not valid JSON. Control characters within strings must be backslash-escaped, and that includes newlines and carriage returns.

Where in the JSON was this errant newline? It was in the "request uri" parameter of one of the entries in the "processes" array. The request URI! The seemingly-random nature of the alerts suddenly makes sense if it’s being triggered by user requests.

Reproduce the problem

First thing to figure out is: can I craft a request to the web server that triggers this issue? Should be easy enough if it is as simple a matter of including a newline character in the URI. But an actual newline character wouldn’t work. This example HTTP request would be immediately rejected by the web server:

GET /foo

bar HTTP/1.1So then it must be an encoded newline. URIs use percent-encoding and the corresponding encodings of newline and carriage return characters are %0A and %0D respectively.

Would it be this simple? Only one way to find out, so:

wget -q -O - 'http://localhost/foo%0abar'Sure enough, the alerts came in shortly afterwards. Problem reproduced!

The triggering of alerts

It soon became clear that as long as the process that handled that request had not been reused for another request, the JSON from the status page would remain invalid. Once the process was given a new (non-triggering) URI to work on, the JSON would become valid again. If it remained invalid long enough to hit Netdata’s alert threshold, then we’d get the annoying alerts.

As an aside, this would actually mean that the alerts would take longer to resolve themselves when the server was under light load. When under heavy load, the process would be reused more rapidly, and thus the problem would go away quicker (possibly even quickly enough to not send any alert at all).

Who to blame? (part two)

My first instinct was to blame either nginx or our configuration of nginx. Surely, I reasoned, it wasn’t right that the web server was decoding the URLs and then passing raw newlines to the CGI server?

So I looked for a while into ways to force nginx to not decode the request URI, but I didn’t find anything of use. The only things I found were:

- A now-fixed security vulnerability in PHP-FPM that also involved

%0Aand%0Dcharacters. - Someone arguing that reversing the decoding was a bad idea, and that nginx was in fact right to be doing what it was doing, per the CGI spec.

Still being unsure myself of whether it was nginx or PHP-FPM to blame, but giving up on the idea of trying to change what was being passed on by nginx, I tried something simpler: I knew the web application well, and was quite certain that there were no legitimate cases in which a newline or carriage return should exist in the request URI (not even in the query string). So I simply added a rule in nginx that blocked any request containing either %0A or %0D:

if ( $request_uri ~* "%0A|%0D" ) { return 400; }Feeling reasonably confident that this would stop the alerts, I left this in place to see what would happen.

Surprise!

The alerts did not stop.

Fortunately I was paying close attention and was able to grab the output from PHP-FPM’s status page before the alert went away. So I again passed it through json_pp to see what was wrong now:

, or } expected while parsing object/hash, at character offset 17509 (before "&","content length":...") at /usr/bin/json_pp line 51.

It turned out that this time it was an unescaped double-quote (") in the request URI that was to blame. Again, I tried to reproduce it myself:

wget -q -O - 'http://localhost/foo%22bar'Sure enough, the PHP-FPM status page immediately became invalid again.

This posed a problem. I knew that blocking requests containing %22 would be problematic because there are various legitimate cases for the URI to contain this (eg. a %22 in a query string would be quite possible if someone were using the website’s search form).

Who to blame? (part three)

At the same time, this had also sealed the deal for me on where the blame lay for this problem. I knew now that nginx was completely blameless – this was 100% on PHP-FPM. It was clearly not applying even the most basic JSON string escaping when generating its status page. Just to check, I also tried with a percent-encoded backslash at the end of the URI (/foo%5C) and this too made the status page break.

I did a search on PHP’s bug tracker, and found two old bugs:

- Bug #66119: FPM full status produces invalid JSON with double quotes in query string

- Bug #64539: FPM status page: JSON parse error

Given that both of these bugs were now over 7 years old, I figured there was sadly little chance of seeing a fix for the problem any time soon, so I had to find another way.

Another way

The documentation for the PHP-FPM module in Netdata, and the example configuration file for it both indicated that it should be given a URL of the form http://localhost/status?full&json for getting the data from PHP-FPM. Note the inclusion of both the “full” and “json” parameters. Obviously the json parameter was essential as the module was expecting a JSON response. But did we really need the full parameter?

The output from the status page when providing the full parameter looks something like this:

{

"accepted conn" : 1441292,

"active processes" : 1,

"idle processes" : 159,

"listen queue" : 0,

"listen queue len" : 0,

"max active processes" : 48,

"max children reached" : 0,

"max listen queue" : 0,

"pool" : "www",

"process manager" : "static",

"processes" : [

...

(massive list of processes, snipped)

...

],

"slow requests" : 0,

"start since" : 566521,

"start time" : 1617017317,

"total processes" : 160

}Without the full parameter it looks like:

{

"accepted conn" : 1441605,

"active processes" : 1,

"idle processes" : 159,

"listen queue" : 0,

"listen queue len" : 0,

"max active processes" : 48,

"max children reached" : 0,

"max listen queue" : 0,

"pool" : "www",

"process manager" : "static",

"slow requests" : 0,

"start since" : 566681,

"start time" : 1617017317,

"total processes" : 160

}The only thing missing from the latter is the details about the individual processes, and it was within this section that the problem lay. So, would Netdata complain if I only gave it the brief summary? Having previously looked at the source code I was pretty sure that the answer was “it will cope just fine, we’ll just lose some of the graphs”.

I was not wrong. After changing it to use http://localhost/status?json the 3 graphs of “active connections”, “requests” and “performance” continued to receive data. The per-process graphs of “duration”, “CPU” and “memory” stopped getting data.

And so I asked myself the most important question: “is the loss of these 3 graphs a price I am willing to pay to not have these annoying alerts any more?”.

The answer was a resounding “yes”.

The end?

That is pretty much the end of the story. We no longer receive annoying false alerts for the PHP-FPM service, but if the service has a genuine problem we will still get alerts about that, so we’re happy. The graphs we lose are not the most essential ones either.

As long as this bug exists in PHP-FPM’s status page, I believe it might be a good idea for Netdata to update the documentation for their plugin to no longer recommend the use of the full parameter, or at the very least to indicate that it is optional, but beyond that… we just need to wait for PHP to fix their JSON encoding bug.

Escape your strings!

Just begun digging into the same bug today, voted on the php bugs to hopefully make them somewhat more visible. There should be no excuse for unescaped json these days.

Thank you for bringing some attention to those bugs! Maybe we’ll see a fix some day ☺

Thank you for the detailed analysis. I’ve experienced exactly the same issue when someone was letting a security scanner loose on our website. Voted on the PHP bug.

Thank you sir for this! Also voted for the php bug.

It seems like this bug has been fixed in PHP 8.1.20 and 8.2.7.

https://github.com/php/php-src/commit/5e64ead64ab5eaba5d62847483c847c1836171d7

Good news, thanks for the update! Though I’m concerned that they only seem to be encoding the query string in this way, and not the entire request URI. I’ll need to do some more testing once we have this version.